So, here's the awkward truth: after weeks of research into LXC, conversations with some pretty experienced DevOps engineers, and even briefly reconsidering Kubernetes, Magic Pages is going back to Docker Swarm.

Yes, the same Docker Swarm I ditched over a year ago. The same technology that half the internet will tell you is "dead." The same orchestrator that made me roll my eyes several times on this blog.

But I'm genuinely excited about it.

Let's explore why.

The Signals

The first Signal on why LXC might be a bad choise came from Ghost itself. Over the past few months, I've noticed something interesting happening in Ghost's development ecosystem. More and more of their tooling is being containerised – but not just any containers. Docker containers.

The Ghost core itself, new features they're working on, even their ActivityPub integration – it's all being built with Docker Compose stacks. I would be stupid to ignore these signals.

Think about it: if I moved to LXC, I'd probably end up running Docker inside those containers anyway. Yeah, we'd have our townhouse – just to convert it into apartments. Making things worse.

The second signal was louder. I talked to a DevOps engineer who's been running LXC in production for several years. I expected stories of triumph, tips for optimisation, maybe some battle scars.

What I got instead was a reality check.

"It works," he said, "but..." That 'but' was followed by tales of growing pains, limited community support, and edge cases over edge cases. For a large organisation with a dedicated ops team? Sure, they could handle it. For a one-person company like Magic Pages? He gently suggested I stick to industry standards.

His words stuck with me: "When you're running solo, you want boring technology. You want the thing that millions of others are using, where every problem you'll face has already been solved by someone else."

That hit home. And he was right.

The Kubernetes Temptation

So there I was, back in Docker land. And a dangerous thought crept in: "Well, if I'm using Docker anyway, why not stick with Kubernetes?"

I contemplated this for a few hours. After all, the new CephFS storage is working so smooth, I haven't had a single alarm since Monday afternoon. Maybe I could make it work with dedicated servers for better efficiency?

But then I hit the same wall that makes Kubernetes feel like bringing a Formula 1 car to pick up groceries. The complexity is still there. Pod density limits in kubelet. Network overlays. Service meshes. It's powerful, but it's also... a lot.

I need something simpler. Something that lets me deploy hundreds of Ghost sites reliably without worrying whether one wrong command can bring down an entire cluster.

Enter Kamal (Exit Kamal)

My search led me to Kamal, 37signals' deployment tool. It promised simple, straightforward deployments. No orchestrator overhead. Just Docker, SSH, and some smart conventions.

I was excited. 37signals knows how to build things that last. But as I dug deeper, I realized Kamal solves a different problem than mine. It's brilliant for deploying one application to many servers – perfect for scaling 37signal's products. But I need the opposite: deploying many applications (customer Ghost sites) with built-in failover and load distribution.

The Plot Twist

And then, in what might be the least exciting plot twist in infrastructure history, I found myself looking at...Docker Swarm.

Yes, Docker Swarm. The technology I abandoned over a year ago. The thing that everyone says you shouldn't use anymore. The orchestrator that even Docker seems to have given up on promoting (though, admitettly, there is still a TON of documentation).

But here's what's funny about coming full circle: you see things differently the second time around.

Remember why I left Docker Swarm? It was network limitations. Maximum 256 IP addresses on the default overlay network. With two containers per Ghost site, I hit the ceiling at around 120 sites.

But sitting there, a year and a half later I thought...why not just create a bigger network? 🤷

I mean, seriously. It's that simple. Instead of using the default network, you create your own overlay network with a larger IP space.

I tested it immediately. Spun up a fresh Docker Swarm cluster using an existing Ansible module (10 minutes, boom, done). Created a custom overlay network with proper IP allocation. Then I started scaling an nginx container for fun. 1replica. 100 replicas. Then 1,000. Then – just because I could – 10,000.

The cluster didn't even blink. It just worked.

Yes, Docker Swarm is not sexy. Nobody's writing excited blog posts about it. There are no trendy conferences dedicated to it. It's the infrastructure equivalent of a reliable 10 year-old Toyota in a world obsessed with the latest EVs.

It works. And more importantly, it works with standard Docker commands. No special languages to learn. No complex abstractions. Just Docker, with some clustering fairy dust on top.

Within a day, I had a working proof of concept:

- Three dedicated servers in a Swarm cluster.

- Connected to my existing CephFS storage cluster.

- Hetzner load balancer distributing traffic.

- Proper firewalls and security hardening.

- And most importantly: Ghost sites running happily.

Being the data nerd I am, I couldn't resist running some comparisons. I deployed the same Ghost sites on both my current Kubernetes cluster and the new Docker Swarm setup, connected them to the same databases, and ran 100 load tests on each (with properly clearing cache in between to not skew the results).

The result? Docker Swarm was 15% faster.

It's not a massive difference. But it's faster, simpler, and uses fewer resources. Sometimes, boring wins.

So here I am, choosing Docker Swarm in 2025. Lol.

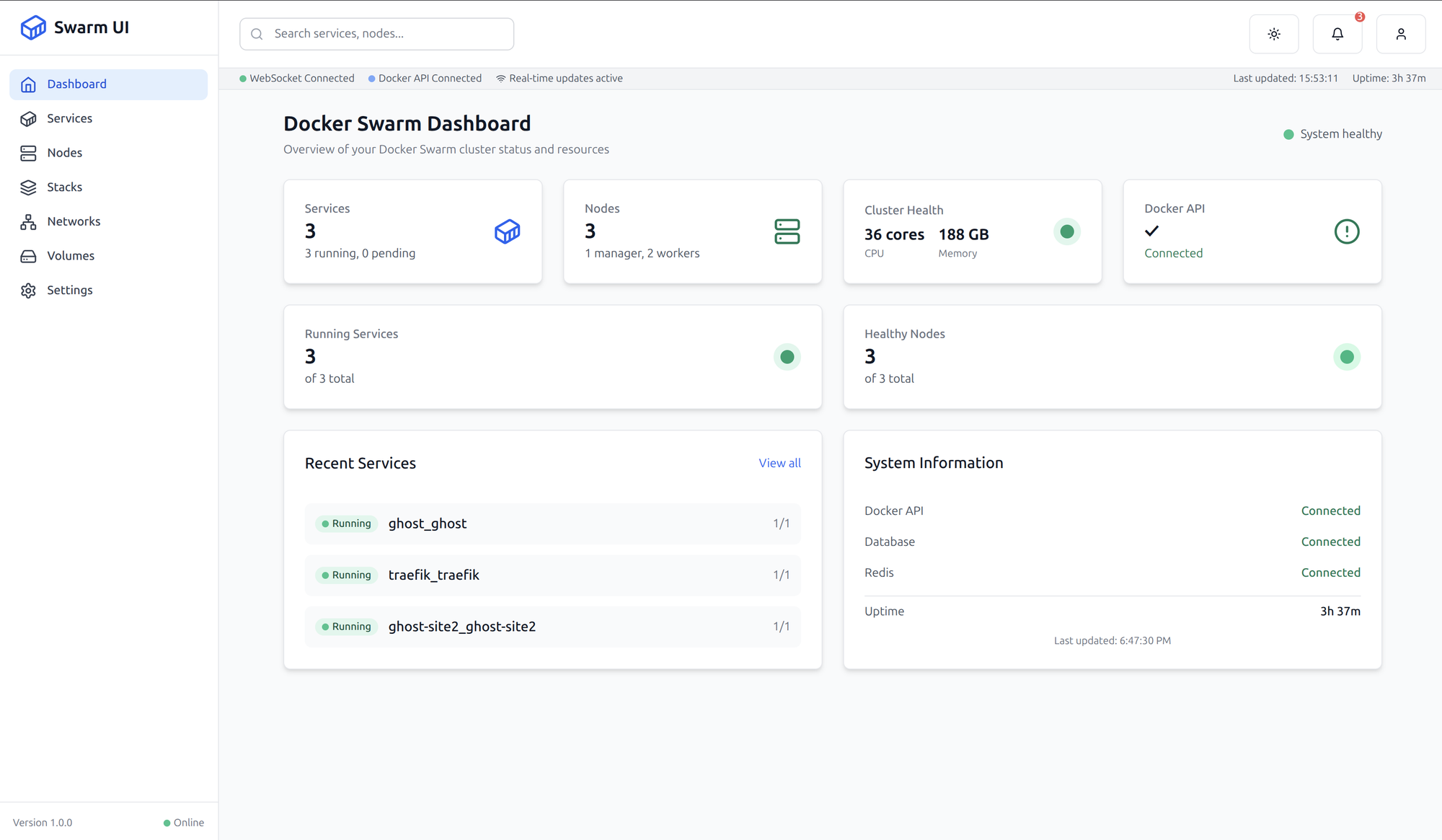

Next up: how can I manage all of this? Last time around, it was all done through a Portainer instance. But maybe there's a better way to have a UI (yeah, I do love those)?

Let's just say...

(and yeah, that's a cliffhanger – bye 👋)